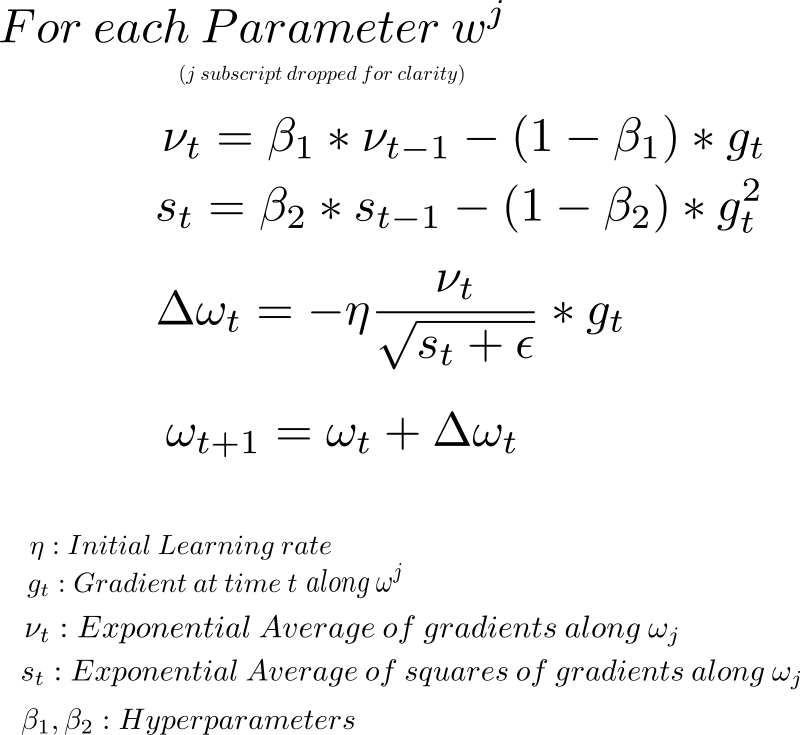

10 Stochastic Gradient Descent Optimisation Algorithms + Cheatsheet | by Raimi Karim | Towards Data Science

Paper repro: “Learning to Learn by Gradient Descent by Gradient Descent” | by Adrien Lucas Ecoffet | Becoming Human: Artificial Intelligence Magazine

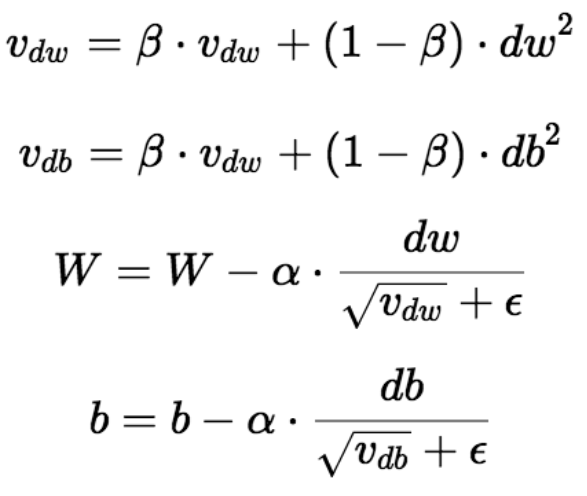

GitHub - soundsinteresting/RMSprop: The official implementation of the paper "RMSprop can converge with proper hyper-parameter"

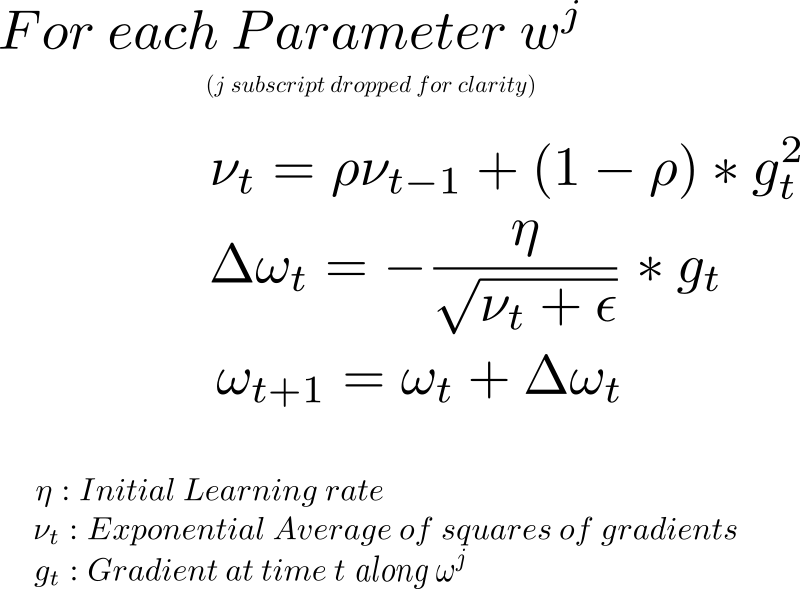

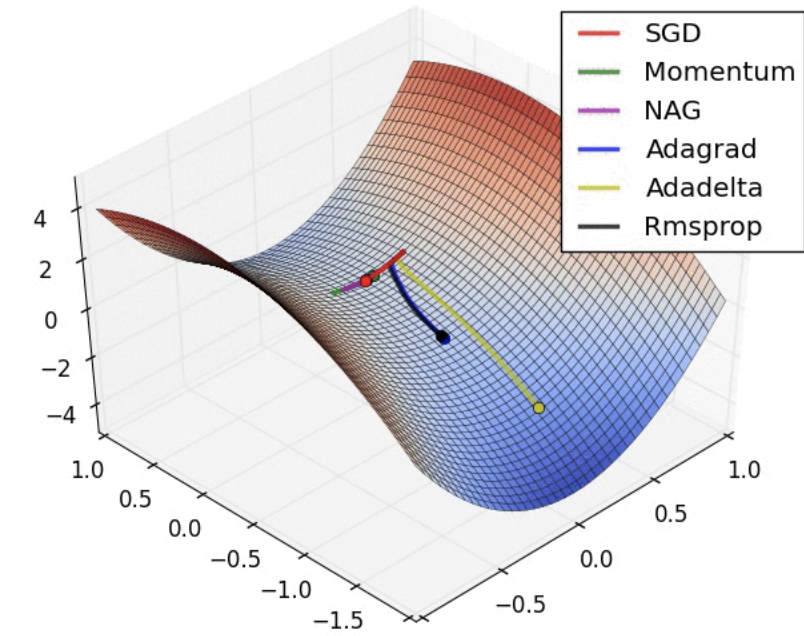

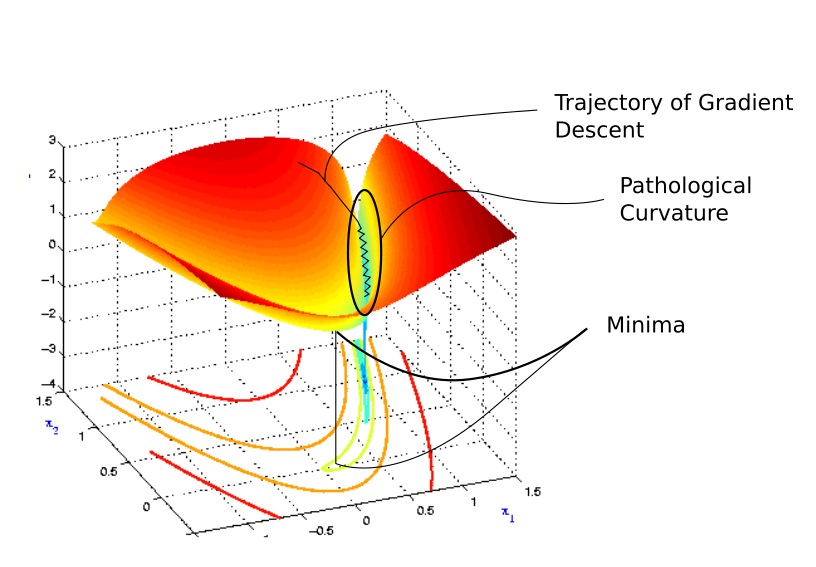

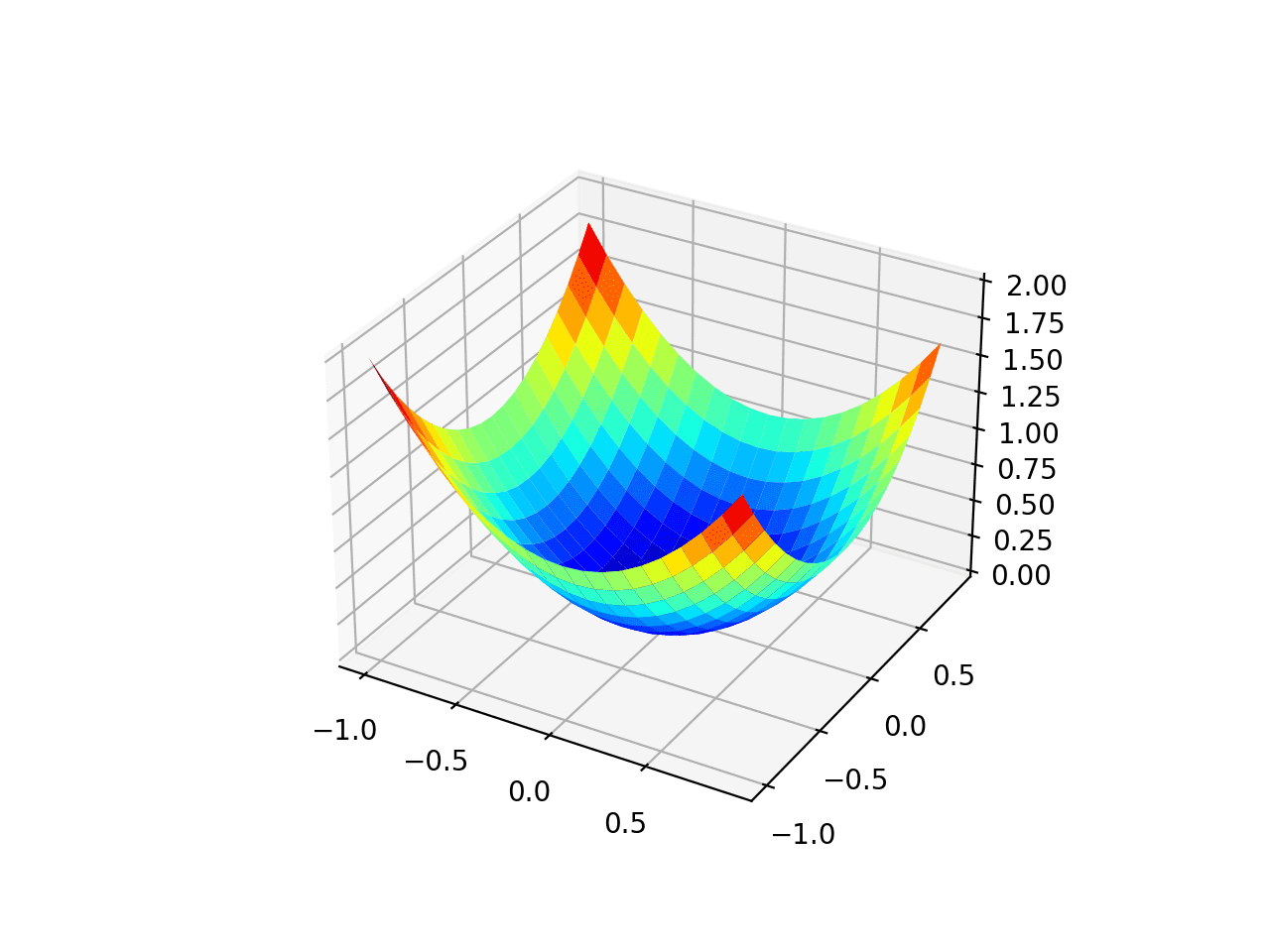

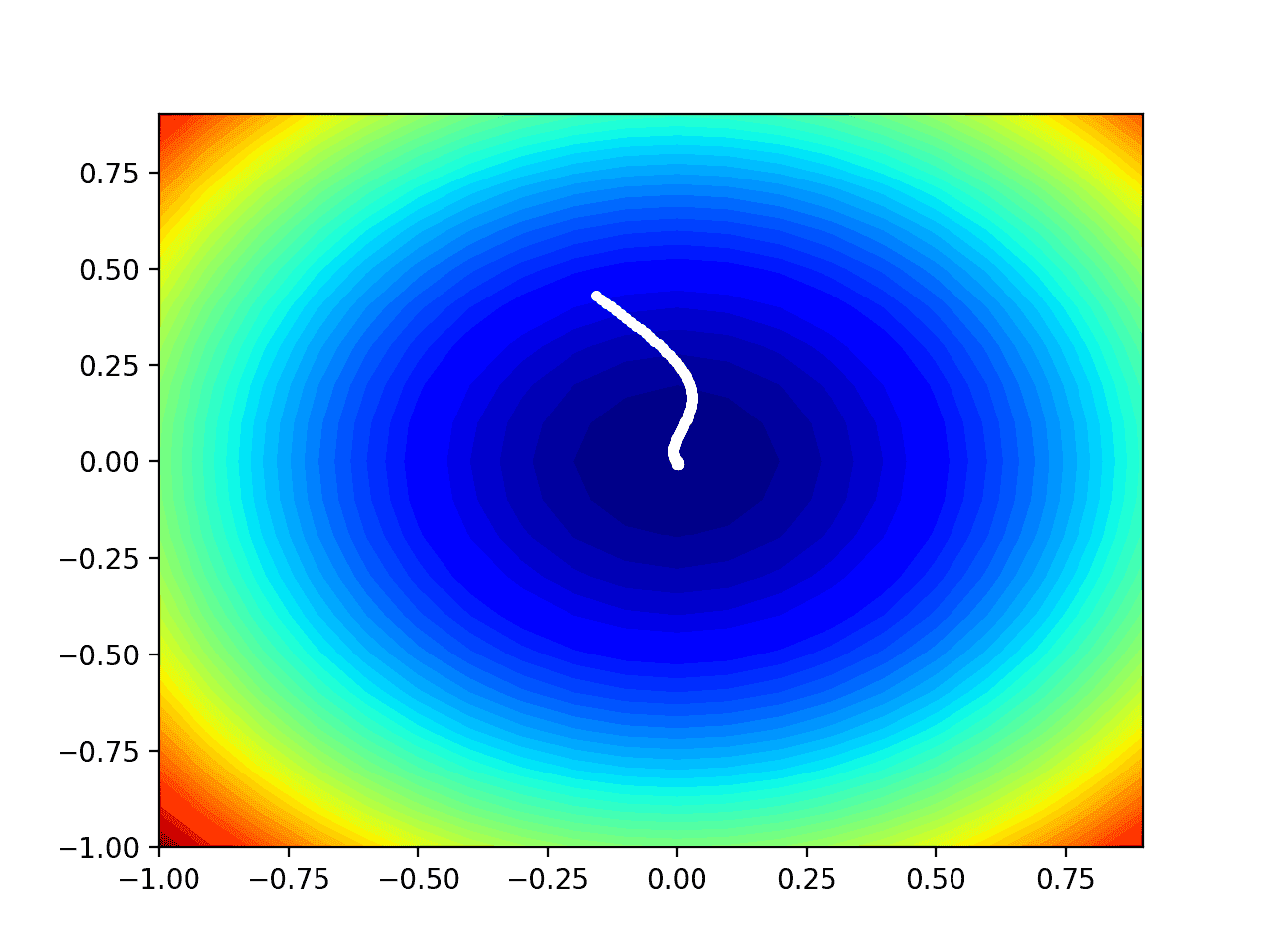

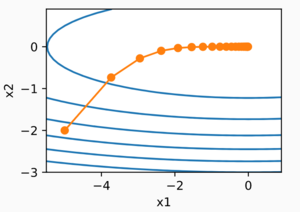

A Visual Explanation of Gradient Descent Methods (Momentum, AdaGrad, RMSProp, Adam) | by Lili Jiang | Towards Data Science

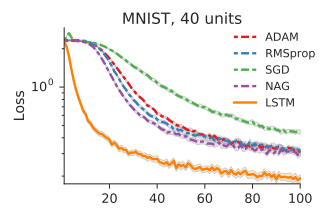

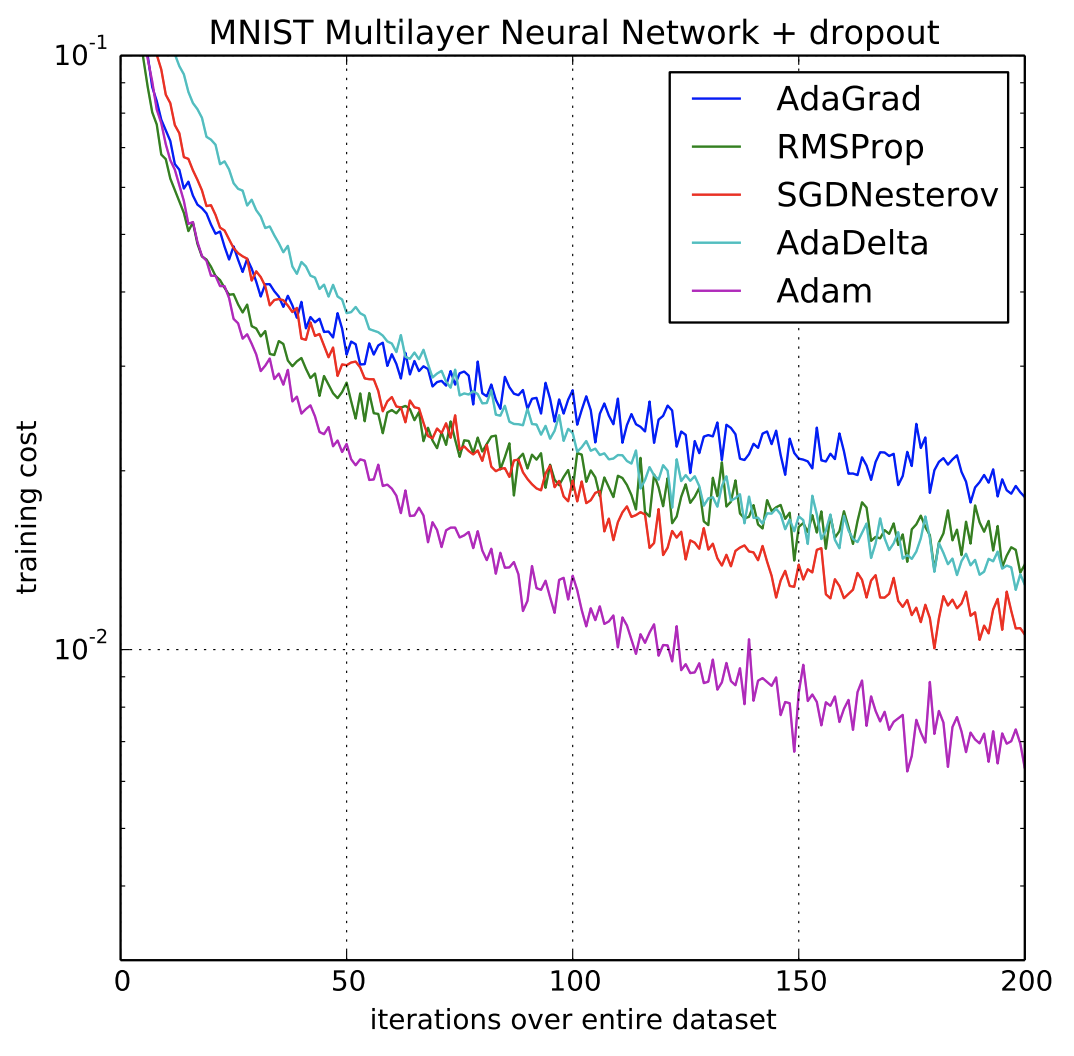

![Convergence Guarantees for RMSProp and ADAM in Non-Convex Optimization and an Empirical Comparison to Nesterov Acceleration | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f0a8159948d0b5d5035980c97b88038d444a1454/8-Figure1-1.png)

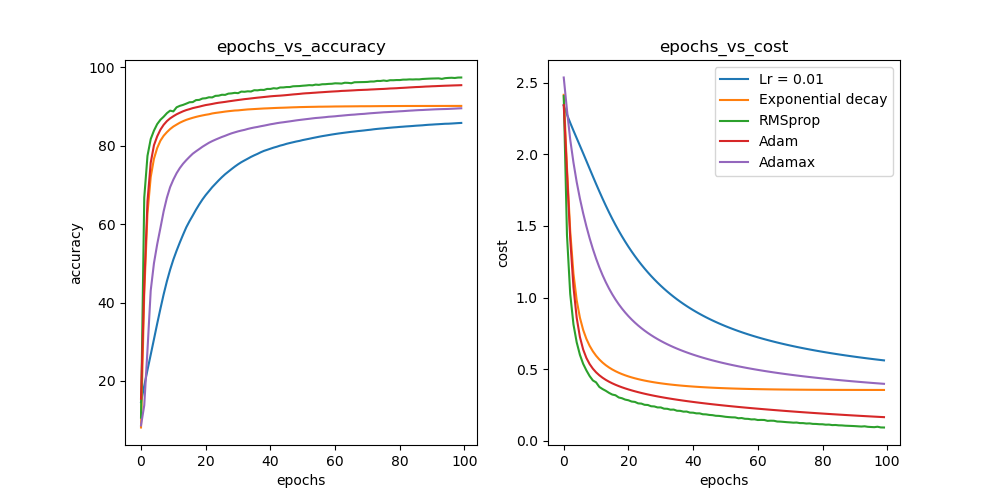

![Variants of RMSProp and Adagrad with Logarithmic Regret Bounds | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/431b37402a5ccf52ee48d2ca2bcaaec54a827b08/7-Figure2-1.png)